Trilinear Point Splatting (TRIPS) and its advantages over Gaussian Splatting and ADOP

Gif extracted from https://lfranke.github.io/trips/

Introduction

Since the great success of NeRF and Gaussian Splatting in the creation of digital twins of existing physical places for virtual production and immersive experiences, new approaches and innovative research have pushed the boundaries of visual realism even further.

In this article, we will have a look at “TRIPS”, or “Trilinear Point Splatting”, a new technique in the radiance fields realm that could represent the next step of visual fidelity for real-time rendered digital twins.

A Recap on Gaussian Splatting and ADOP

What is Gaussian Splatting?

If you're not familiar with Gaussian Splatting, don't worry! Check out this comprehensive article to gain a better understanding of how it works and stay informed about developments in this research field.

In short, 3D Gaussian Splatting is a computer graphics technique that creates high-fidelity, photorealistic 3D scenes by representing the scene as a cloud of 3D Gaussians, or "splats," and projecting them onto the 2D image plane, using a Gaussian function for each splat instead of the traditional “dots”. This captures colors and view-dependent effects such as reflections more accurately than plain points, while still rendering the digital environment in real time.
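To make the difference between a Gaussian footprint and a plain dot more concrete, here is a minimal Python sketch of how a single, already projected splat could weight the pixels around its center. The function name and the 2x2 covariance input are my own illustrative assumptions, not the API of the reference implementation, and the sketch leaves out projection, depth sorting, and blending entirely.

```python
import math

def gaussian_splat_weight(dx, dy, cov):
    """Weight of a 2D Gaussian splat at offset (dx, dy) from its center.

    cov is a symmetric 2x2 covariance ((a, b), (b, c)) in pixel units.
    Hypothetical helper for illustration only.
    """
    (a, b), (_, c) = cov
    det = a * c - b * b
    if det <= 0:
        raise ValueError("covariance must be positive definite")
    # Quadratic form d^T * cov^-1 * d, written out for the 2x2 case.
    q = (c * dx * dx - 2.0 * b * dx * dy + a * dy * dy) / det
    return math.exp(-0.5 * q)

# An elongated splat: wide along x (variance 4), narrow along y (variance 1).
cov = ((4.0, 0.0), (0.0, 1.0))
center = gaussian_splat_weight(0.0, 0.0, cov)    # full weight at the center
along_x = gaussian_splat_weight(2.0, 0.0, cov)   # falls off slowly along x
along_y = gaussian_splat_weight(0.0, 2.0, cov)   # falls off quickly along y
```

A hard "dot" would contribute to one pixel only, whereas the Gaussian fades out smoothly and can be stretched along one axis, which is what lets splats cover surfaces without leaving holes.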

Despite its benefits and great performance improvements, especially when compared to traditional NeRF techniques, some of the resulting Gaussian Splats are affected by visual artifacts, such as “floaters” (pieces of the point cloud that are not visualized correctly and look like bits of cloud floating in the wrong place) and blurry areas (especially where the input photos overlap too little or where camera alignment fails during the training process).

Luckily, workarounds exist to improve the visual quality of the final result, such as “Deblurring 3D Gaussian Splatting”, which could be a promising alternative.

Gif extracted from https://benhenryl.github.io/Deblurring-3D-Gaussian-Splatting/

However, as of today the code for this deblurring technique is still a work in progress and not yet available on GitHub. I would recommend following the updates of this specific research, which in the future might offer good results in terms of visual realism and clarity for Gaussian splats.

What is ADOP?

Another state-of-the-art technique that deals with the problem differently is ADOP (Approximate Differentiable One-Pixel Point Rendering).

Source: https://github.com/darglein/ADOP

There is a public repository on GitHub that shows examples of its applications and offers the tools to train custom datasets. Alongside the detailed explanation and powerful UI developed for this project, the experimental ADOP VR Viewer is another interesting utility that uses OpenVR/SteamVR to visualize the final result in virtual reality.

Source: https://github.com/darglein/ADOP

The ADOP pipeline supports camera alignment using COLMAP, the same workflow supported by Nerfstudio: the camera poses are computed first, and the model (Nerfacto or Splatfacto, in the case of Nerfstudio) is trained afterwards. To facilitate this process, the ADOP repository provides a "colmap2adop" converter, which must be run before the "adop_train" executable that generates the final result.

Despite its advantages, ADOP can be affected by temporal instability (some areas of the viewed environment change in appearance depending on the viewing angle) and by reduced visual quality. The latter is due to the specific neural reconstruction network it relies on, which cannot effectively fill large gaps in the point cloud or deal with unevenly distributed data (as discussed in their research paper).

Wouldn’t it be great to have an alternative that combines the approach of Gaussian Splatting and ADOP to achieve better results? Enter TRIPS, the new Trilinear Point Splatting technique for Real-Time Radiance Field Rendering.

Introducing TRIPS

Developed by a team from Friedrich-Alexander-Universität Erlangen-Nürnberg, TRIPS combines the best of Gaussian Splatting and ADOP with a novel approach that rasterizes the points of a point cloud into a screen-space image pyramid.

Technical Innovations of TRIPS

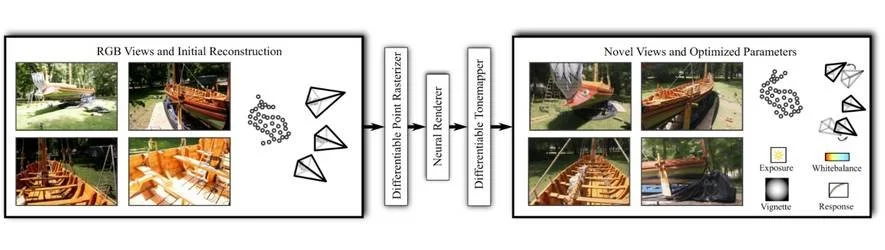

Source: https://lfranke.github.io/trips/

In contrast to Gaussian-shaped splats, TRIPS renders a point cloud using cubic (or volumetric) splats, defined trilinearly as 2x2x2 blocks, into multi-layered feature maps, which are then processed via a differentiable pipeline, and rendered into a tone-mapped final image.

The trilinear point renderer takes several pieces of information as input, some stored per point and some describing the camera, namely:

The neural descriptors of the Point (colors).

The transparency of the Point.

The position in space of the Point.

The world space size of the Point.

Camera intrinsic parameters (lens parameters, aspect ratio, camera sensor parameters).

The extrinsic pose of the target view.

A novelty in their method is that they “do not use multiple render passes with progressively smaller resolutions” (as explained in their research paper) since it would cause severe overdraw in the lower resolution layers. Instead, their paper explains that they only “compute the two layers which best match the point’s projected size and render it only into these layers as 2x2 splat”.
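The layer selection quoted above can be sketched in a few lines of Python. This is only my reading of the idea, under stated assumptions: a pinhole camera (projected size = focal length in pixels × world size / depth), a pyramid in which layer l has pixels 2^l times larger than the full-resolution layer 0, and a linear blend between the two neighbouring layers. The function name and parameters are hypothetical, and the paper's exact formulation may differ.

```python
import math

def select_layers(world_size, depth, focal_px, num_layers):
    """Pick the two pyramid layers that best match a point's projected size.

    Returns (lower_layer, upper_layer, weight_lower, weight_upper).
    Illustrative sketch, not the TRIPS implementation.
    """
    # Projected size of the point in full-resolution pixels (pinhole model).
    size_px = focal_px * world_size / depth
    # Points smaller than one pixel are treated as one pixel wide.
    size_px = max(size_px, 1.0)
    # Layer whose pixel size (2^layer) is the largest one not exceeding size_px.
    lower = min(int(math.floor(math.log2(size_px))), num_layers - 1)
    upper = min(lower + 1, num_layers - 1)
    if upper == lower:
        # Already at the coarsest layer: everything goes there.
        return lower, upper, 1.0, 0.0
    # Linear position of size_px between 2^lower and 2^(lower + 1).
    t = (size_px - 2 ** lower) / 2 ** lower
    return lower, upper, 1.0 - t, t

# A point that projects to 6 pixels sits halfway between layer 2 (4 px) and layer 3 (8 px).
print(select_layers(world_size=0.03125, depth=2.0, focal_px=384.0, num_layers=8))
# (2, 3, 0.5, 0.5)
```

The point is written only into those two layers, so the cost per point stays constant no matter how large it appears on screen.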

Trilinear point splatting – image source: https://lfranke.github.io/trips/

The core of TRIPS involves projecting each point into the target image and assigning it to specific layers of an image pyramid based on its projected screen-space size, which in turn depends on the point's world-space size and its distance from the camera. Larger points are written to lower-resolution layers, which cover more space in the final image. This organization is facilitated by a trilinear write operation, optimizing the rendering of point clouds by efficiently managing spatial and resolution variability.
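As a rough illustration of the trilinear write, the following Python sketch computes the eight weights of a 2x2x2 splat: bilinear weights over a 2x2 pixel neighbourhood in each of two adjacent layers, multiplied by a linear weight between the layers. The function name, the pixel-center convention (centers at integer + 0.5), and the dictionary output are assumptions made for this example, and bounds checking against the image size is omitted.

```python
import math

def trilinear_weights(x, y, lower_layer, upper_weight):
    """Weights of a 2x2x2 splat for a point at (x, y) in full-resolution pixels.

    lower_layer: index of the finer of the two layers being written.
    upper_weight: share (0..1) that goes to the coarser layer lower_layer + 1.
    Returns {(layer, px, py): weight}. Illustrative sketch only.
    """
    weights = {}
    layer_shares = ((lower_layer, 1.0 - upper_weight),
                    (lower_layer + 1, upper_weight))
    for layer, w_layer in layer_shares:
        scale = 2 ** layer  # one pixel of this layer covers `scale` full-res pixels
        # Position relative to this layer's pixel centers.
        px = x / scale - 0.5
        py = y / scale - 0.5
        x0, y0 = math.floor(px), math.floor(py)
        fx, fy = px - x0, py - y0
        # Bilinear weights over the 2x2 neighbourhood, scaled by the layer share.
        for dy, wy in ((0, 1.0 - fy), (1, fy)):
            for dx, wx in ((0, 1.0 - fx), (1, fx)):
                weights[(layer, x0 + dx, y0 + dy)] = w_layer * wx * wy
    return weights

w = trilinear_weights(x=10.0, y=6.0, lower_layer=1, upper_weight=0.5)
total = sum(w.values())  # the eight weights always sum to 1
```

Because every weight is a smooth function of the point's position and size, gradients can flow back to those quantities during training, which is the reason for using a trilinear write rather than snapping each point to a single pixel.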

This technique shows a conceptual resemblance to hierarchical z-map-based occlusion culling and Forward+ lighting. Despite their operational differences (occlusion culling efficiently manages visibility through depth hierarchies, and Forward+ optimizes lighting by organizing light sources relative to the camera's view frustum), each approach relies on a foundational strategy: optimizing processing through the distribution of data across hierarchical structures. TRIPS accomplishes this via an image pyramid, hierarchical z-map-based occlusion culling through depth layers, and Forward+ lighting through spatial decomposition of the scene. These methodologies demonstrate the versatility and effectiveness of hierarchical data structures in enhancing rendering performance across various domains.

The complete rendering pipeline adopted by TRIPS at a high level is:

Start from a set of input images with camera parameters and a dense point cloud, which can be obtained through methods like multi-view stereo or LiDAR sensing.

Project the neural color descriptors of each point into an image pyramid using the TRIPS technique mentioned above.

Each pixel on each layer of the pyramid stores a depth-sorted list of colors and alpha values that are then blended together resulting in a specific pixel value for each layer of the pyramid.

Each layer (“feature layer”) of the resulting pyramid is then given to a neural network, which computes the resulting image via a gated convolution block.

A final post processing pass applies exposure correction, white balance, and color correction through a physically based tone mapper.
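The blending step in the pipeline above (a depth-sorted list of colors and alpha values per pixel) can be illustrated with standard front-to-back alpha compositing. This is a generic sketch in Python rather than the TRIPS implementation: the function name and the tuple layout are hypothetical, and in TRIPS the "colors" are neural feature descriptors with more channels than the two used here.

```python
def blend_pixel(fragments):
    """Front-to-back alpha blending of the fragments that landed on one pixel.

    fragments: list of (depth, color, alpha), where color is a tuple of floats.
    Returns (blended_color, accumulated_alpha). Illustrative sketch only.
    """
    if not fragments:
        return (), 0.0
    channels = len(fragments[0][1])
    color = [0.0] * channels
    transmittance = 1.0  # fraction of light still passing through to the back
    # Nearest fragment first, so closer points occlude farther ones.
    for _depth, frag_color, alpha in sorted(fragments, key=lambda f: f[0]):
        contribution = transmittance * alpha
        for i in range(channels):
            color[i] += contribution * frag_color[i]
        transmittance *= 1.0 - alpha
    return tuple(color), 1.0 - transmittance

# Two half-transparent fragments; the nearer one (depth 1.0) dominates.
result = blend_pixel([
    (2.0, (0.0, 1.0), 0.5),
    (1.0, (1.0, 0.0), 0.5),
])
print(result)  # ((0.5, 0.25), 0.75)
```

Running this once per pixel and per pyramid layer yields the feature layers that are then handed to the neural network.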

For more technical information about the mathematical explanation of the approach introduced by TRIPS, I recommend reading the original research paper.

Comparative Analysis

The innovative rasterization approach and the “differentiable trilinear point splatting” introduced by TRIPS allow it to preserve fine details in the reconstructed digital twin, avoiding the blurriness of an equivalent scene generated with Gaussian Splatting, whilst also reducing the number of floaters.

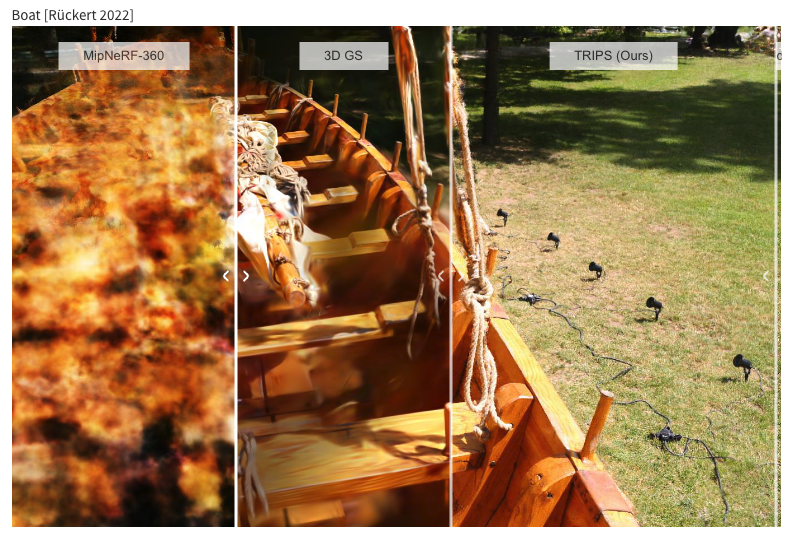

3D Gaussian Splatting (3D GS) vs TRIPS – gif extracted from https://lfranke.github.io/trips/

In addition, the temporal stability of TRIPS is an improvement over ADOP. This means that the final result more accurately visualizes the scene; by showing more coherent images between different angles of vision, each object is more distinguishable and visually stable.

ADOP vs TRIPS – gif extracted from https://lfranke.github.io/trips/

In the GIF above, the improved temporal stability of TRIPS over ADOP is especially noticeable in the grass and in the leaves of the trees in the background.

The TRIPS repository provides many more comparisons of the approach versus traditional Gaussian Splatting, ADOP, and additionally Mip-NeRF 360. In terms of the fine details recovered after training, TRIPS is the clear winner in the examples shown, with Gaussian Splatting in second place.

Example showing how Mip-NeRF 360 compares to the more recent Gaussian Splatting and TRIPS training models - gif extracted from https://lfranke.github.io/trips/

It is also worth mentioning that TRIPS can render the scene at around 60 frames per second, depending on scene complexity and resolution. The only caveat is that camera intrinsics and extrinsics need to be estimated a priori (for example via COLMAP).

Applications for TRIPS

When we consider applications of TRIPS in the real world, there are many opportunities, most of which are similar to those afforded by Gaussian Splatting, which you can find in my previous article on the topic.

In the architecture and real estate industries, TRIPS could enable immersive virtual tours, letting clients explore properties in stunning detail before construction or renovation.

In the entertainment industry, TRIPS could enhance virtual production, providing filmmakers with more realistic and interactive environments for movies or video games.

Additionally, in cultural heritage preservation, TRIPS could offer a tool for creating detailed digital twins of historic sites, enabling virtual exploration and aiding in restoration efforts by capturing and visualizing intricate details with unprecedented clarity.

In Conclusion

It has only been a couple of weeks since the release of the source code for TRIPS, which has already triggered a lot of interest in this novel technique. It’s great to see that the author Linus Franke has been very active in updating the page instructions and resolving issues reported by the community.

So far the visual results look very promising, even if some users report that the required training times could be significantly longer compared to other techniques. As an example, when using the “mipnerf360 garden” dataset, it could take around 12 hours of computation on a Desktop NVIDIA RTX 3090 GPU to complete one-sixth of the overall computation (according to a report on GitHub). As a response to these reported times, the author mentioned that on an NVIDIA RTX 4090 GPU, the training for the same dataset would instead take around four hours, which reflects the demanding computational power required to produce the final training results.

I’m sure we’ll see more improvements in the coming weeks for this repository, which could potentially make TRIPS the new king of real-time radiance field rendering and digital twin visualization.

I cannot wait to see where this technology will lead us, and I will definitely keep a star on GitHub for this repository. Congratulations to the authors of this fantastic research. They deserve gratitude from the open-source community for the great effort they put into pushing the boundaries of visual realism and digital technology even further.

I hope this article has helped you discover something new and has inspired you to research even more about this topic.

Feel free to follow me and Magnopus for further updates on the latest technological novelties and interesting facts. Thanks for reading and good luck with your projects!